Install Tensorflow 2.0.0 on Ubuntu 18.04 with Nvidia GTX1650/ GTX1660Ti | by Pratik Karia | Level Up Coding

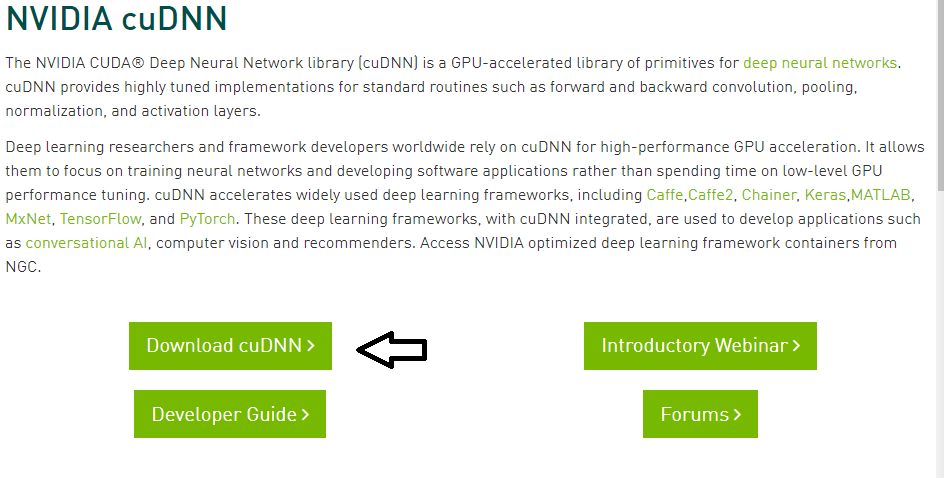

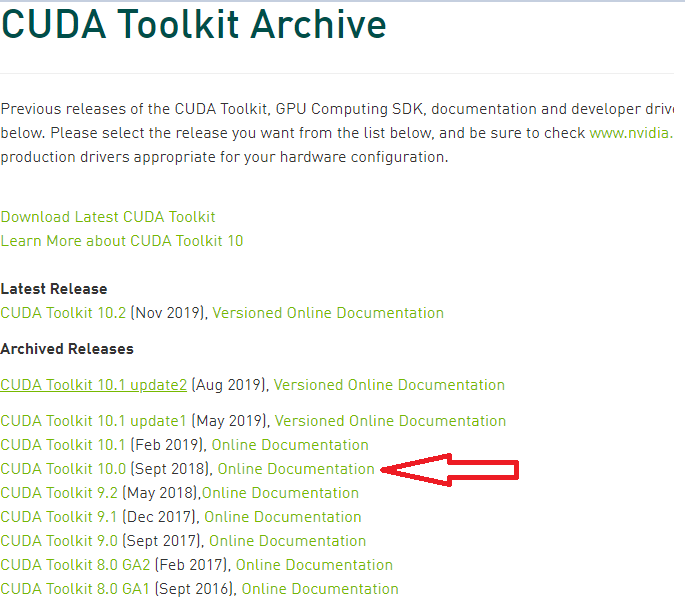

Install Tensorflow-GPU 2.0 with CUDA v10.0, cuDNN v7.6.5 for CUDA 10.0 on Windows 10 with NVIDIA Geforce GTX 1660 Ti. | by Suryatej MSKP | Medium

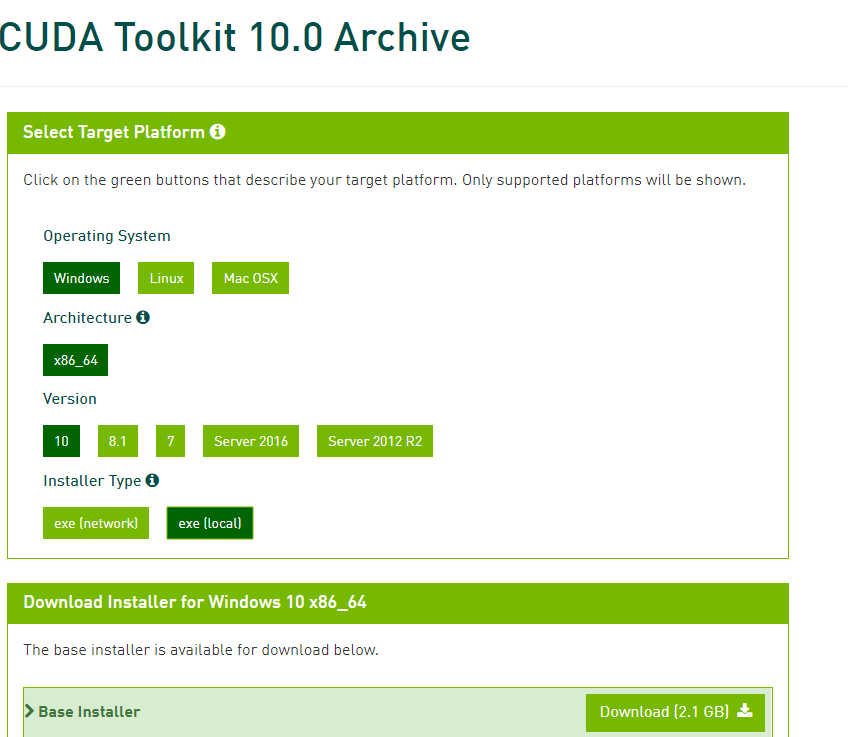

1080TI better than 2080TI??? Gigabyte GeForce RTX 2080TI Ultrareview: Tensorflow, mining, gaming - YouTube

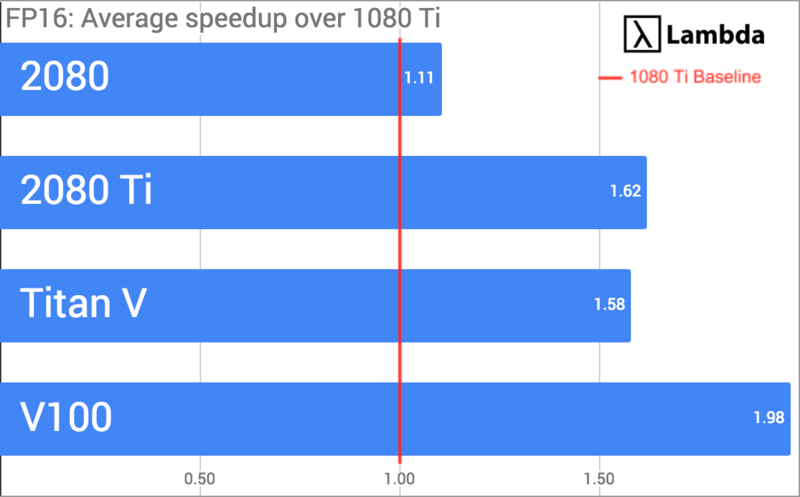

TensorFlow Performance with 1-4 GPUs -- RTX Titan, 2080Ti, 2080, 2070, GTX 1660Ti, 1070, 1080Ti, and Titan V | Puget Systems

Forza Horizon 4 - The NVIDIA GeForce GTX 1660 Super Review, Feat. EVGA SC Ultra: Recalibrating The Mainstream Market

![Tensorflow guidance](/f411c4ce016144ca8f282cdaeab387de/Tensorflow%20guidance.jpg)