How to Set Up Nvidia GPU-Enabled Deep Learning Development Environment with Python, Keras and TensorFlow

GPU No Longer Working in RStudio Server with Tensorflow-GPU for AWS - Machine Learning and Modeling - Posit Community

Best Deep Learning NVIDIA GPU Server in 2022–2023: 8x water-cooled NVIDIA H100, A100, A6000, 6000 Ada, RTX 4090, Quadro RTX 8000 GPUs and dual AMD Epyc processors

keras does not pick up tensorflow-gpu - Machine Learning and Modeling - Posit Forum (formerly RStudio Community)
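When Keras or TensorFlow does not seem to use the GPU, as in the two forum threads above, a reasonable first check is whether TensorFlow detects a GPU device at all. The following is a minimal sketch that assumes TensorFlow 2.1 or later, where `tf.config.list_physical_devices` is available. The helper name `visible_gpus` is hypothetical and exists only for illustration.

```python
# Hypothetical helper (illustration only): report the GPUs visible to TensorFlow.
# Assumes TensorFlow 2.1+, where tf.config.list_physical_devices exists.
def visible_gpus():
    """Return the GPU devices TensorFlow can see, or [] if TensorFlow is missing."""
    try:
        import tensorflow as tf
    except ImportError:
        # TensorFlow is not installed in this environment.
        return []
    # A list of PhysicalDevice objects; empty if TensorFlow detects no GPU.
    return tf.config.list_physical_devices("GPU")


if __name__ == "__main__":
    gpus = visible_gpus()
    if gpus:
        for gpu in gpus:
            print("Found:", gpu.name)
    else:
        print("No GPU visible to TensorFlow; check the driver, CUDA and cuDNN setup.")
```

An empty list usually means that the installed TensorFlow build lacks GPU support or cannot load the CUDA and cuDNN libraries it expects.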

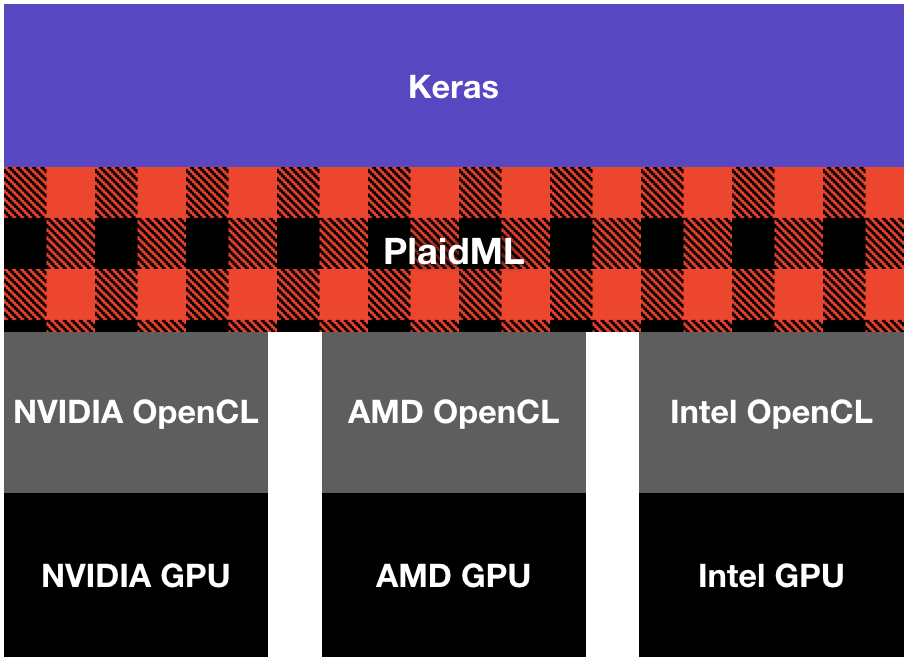

Use an AMD GPU for your Mac to accelerate Deep Learning in Keras | by Daniel Deutsch | Towards Data Science

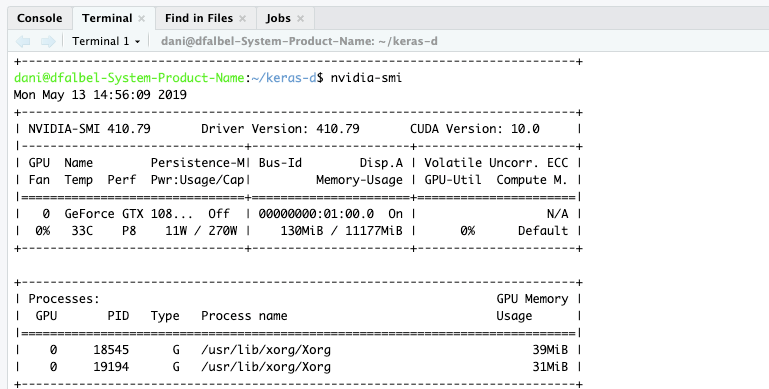

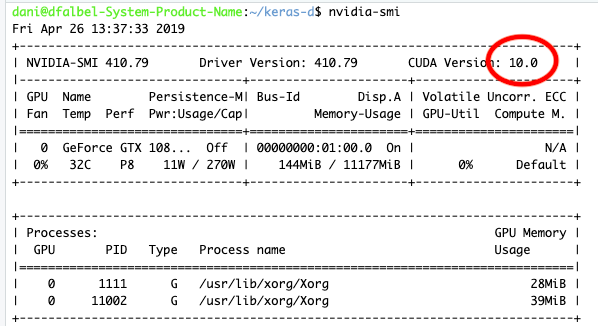

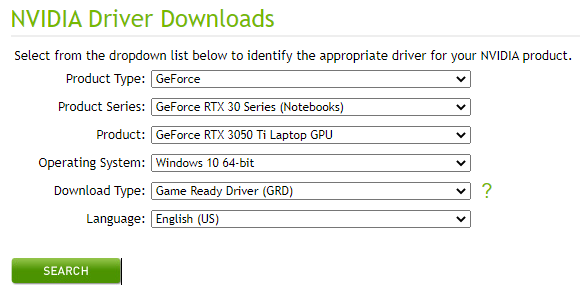

Setting up a Deep Learning Workplace with an NVIDIA Graphics Card (GPU) — for Windows OS | by Rukshan Pramoditha | Data Science 365 | Medium

Introducing Vultr Talon with NVIDIA GPUs — Cloud Platform Breakthrough Makes Accelerated Computing Efficient and Affordable

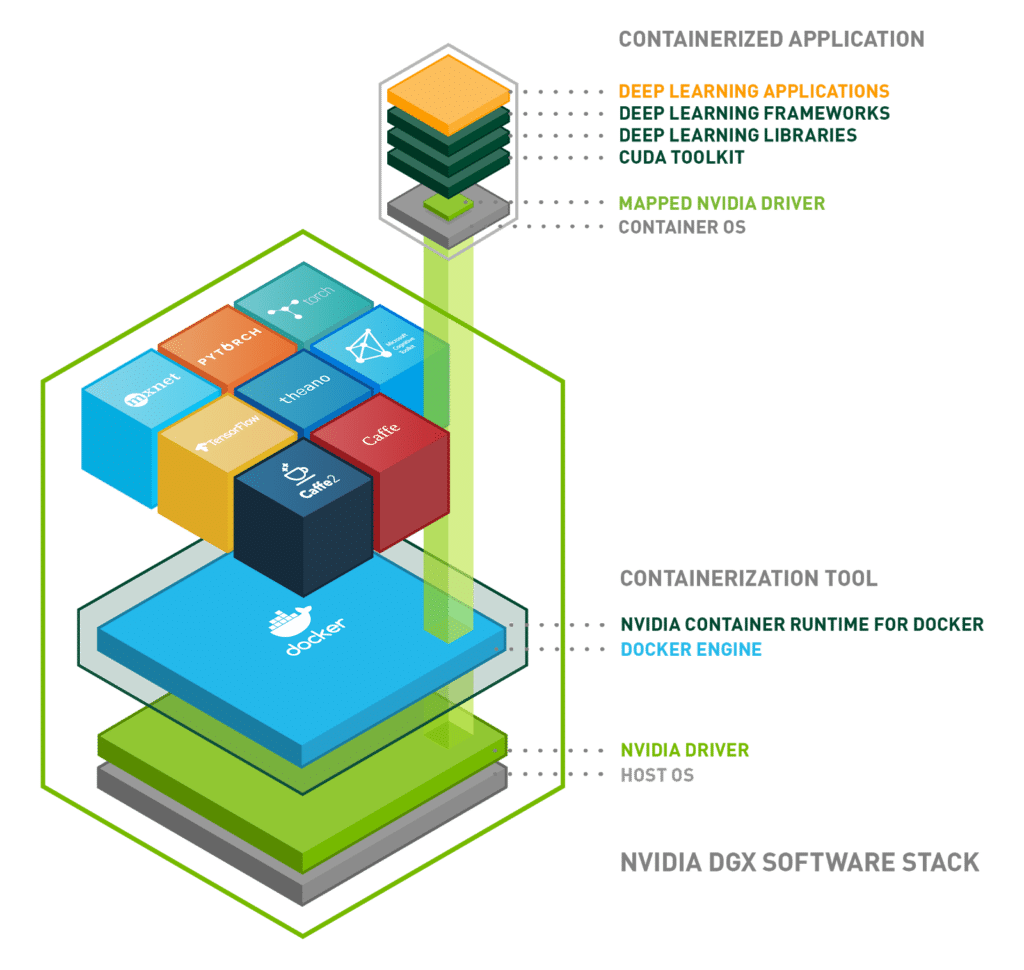

Building a Scalable Deep Learning Serving Environment for Keras Models Using NVIDIA TensorRT Server and Google Cloud

Building a scalable Deep Learning Serving Environment for Keras models using NVIDIA TensorRT Server and Google Cloud – R-Craft

Training Neural Network Models on GPU: Installing CUDA and cuDNN64_7.dll | Learn to train your models on a GPU instead of a CPU. Install CUDA and download the cuDNN64_7.dll library to get it working.