Speedup Python Pandas with RAPIDS GPU-Accelerated Dataframe Library called cuDF on Google Colab! - Bhavesh Bhatt

Information | Free Full-Text | Machine Learning in Python: Main Developments and Technology Trends in Data Science, Machine Learning, and Artificial Intelligence

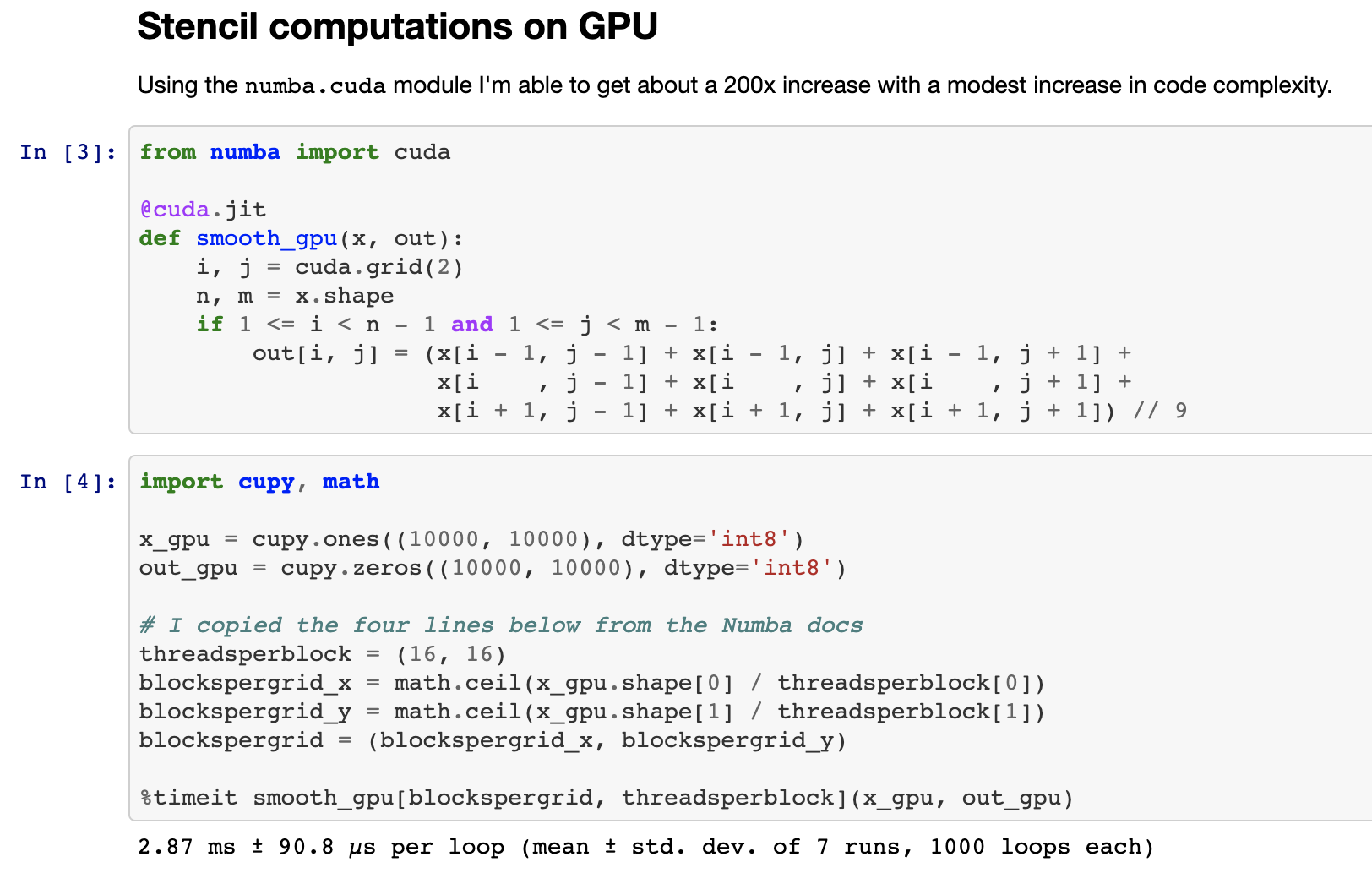

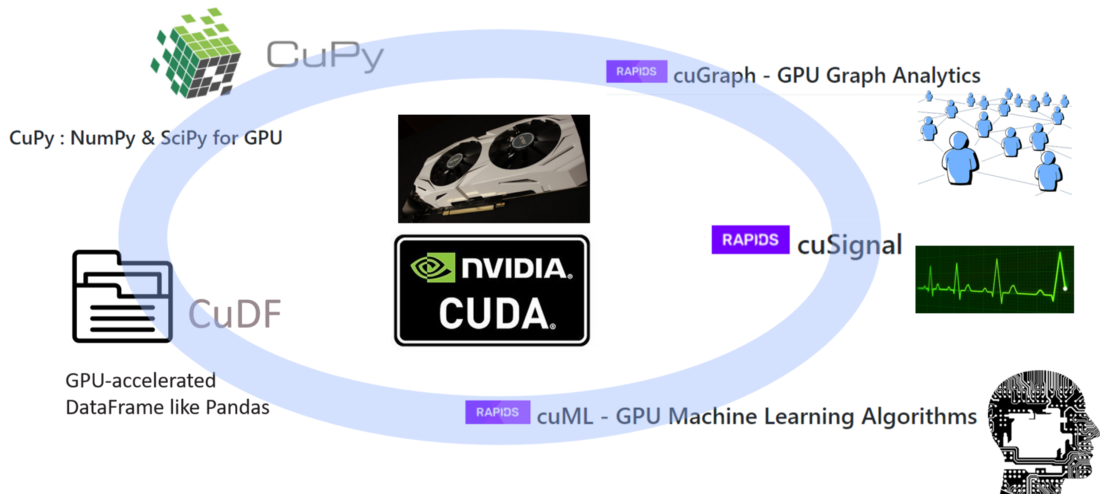

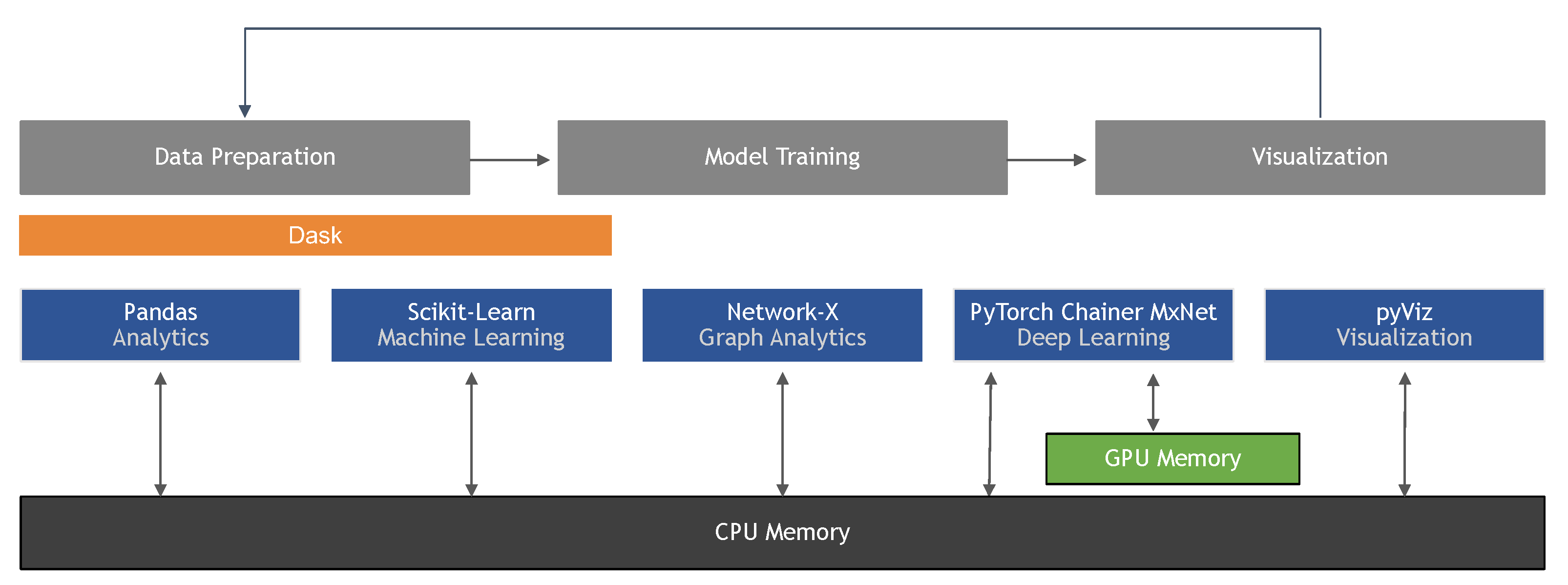

Pandas DataFrame Tutorial - Beginner's Guide to GPU Accelerated DataFrames in Python | NVIDIA Technical Blog

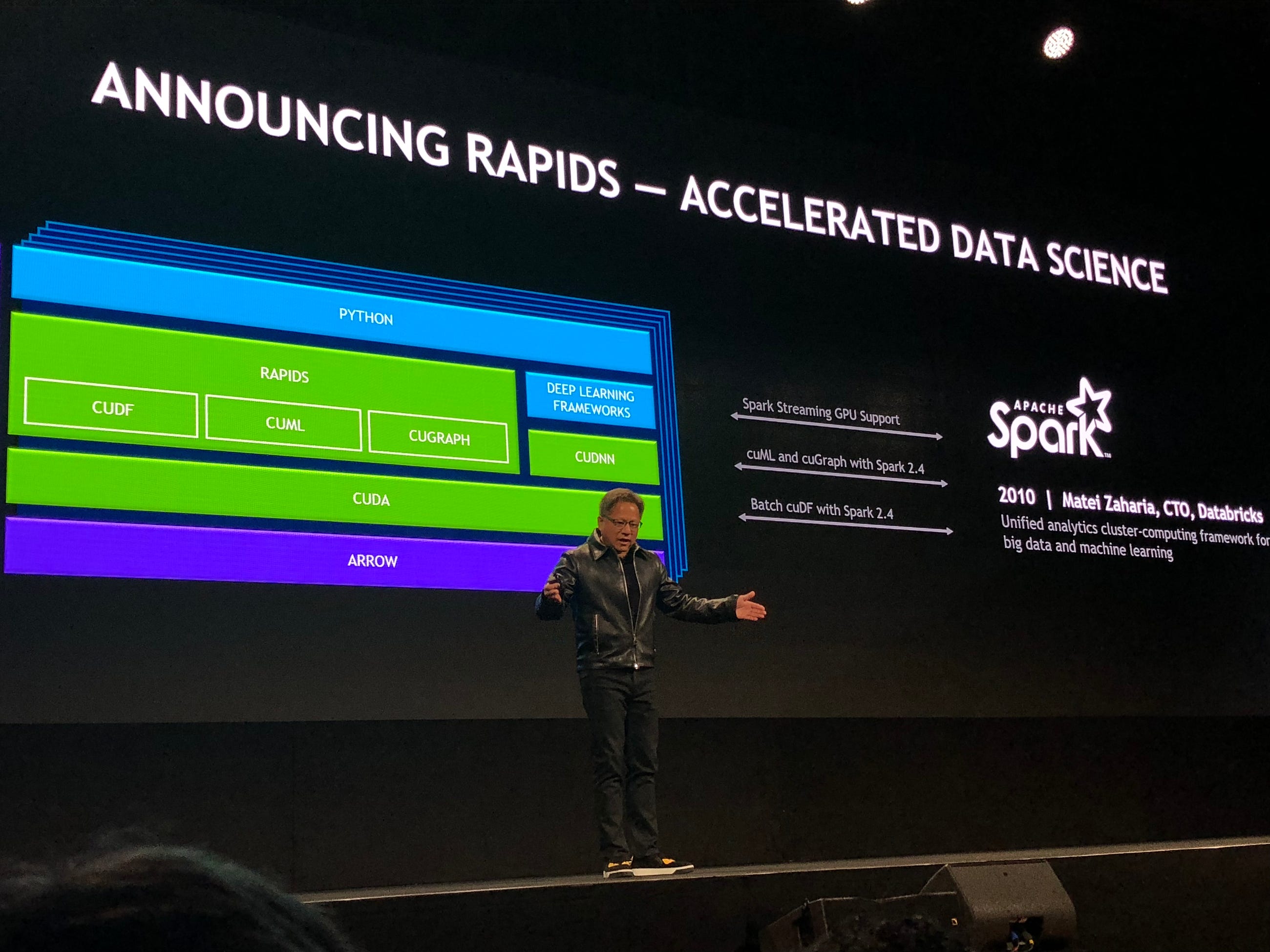

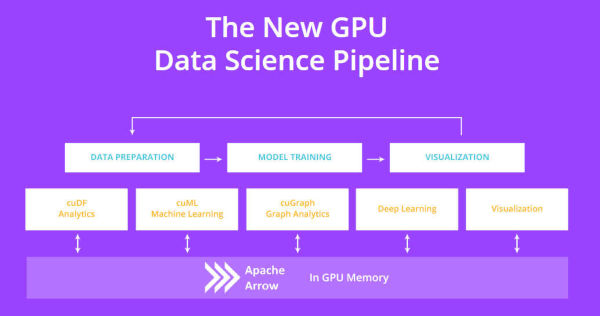

![[PDF] GPU Acceleration of PySpark using RAPIDS AI | Semantic Scholar](https://d3i71xaburhd42.cloudfront.net/117b8d9d02cf528932a6acf36cc9056c60f3b5f5/3-Figure2-1.png)

![[PDF] GPU Acceleration of PySpark using RAPIDS AI | Semantic Scholar](https://d3i71xaburhd42.cloudfront.net/117b8d9d02cf528932a6acf36cc9056c60f3b5f5/3-Figure3-1.png)